Some time ago, I was tasked with creating a support chatbot to handle users’ requests related to VM creation and VPN permissions.

The main goal was to prepare the parameters needed to process users’ requests. The chatbot should:

— Understand the user’s request and determine which group it belongs to.

— Prepare the parameters for the support team.

— Send an email to the support team or create a task in the task management tool.

You might think this is not a complicated task, but if you want your chatbot to understand requests written in natural language, it becomes considerably more challenging.

Is there a system that has already mastered natural language processing (NLP)?

The answer is yes: OpenAI models. OpenAI’s GPT (Generative Pre-trained Transformer) models are trained to understand both natural language and code.

GPT models produce text responses based on the inputs they are given, often called ‘prompts’. Keep in mind that ChatGPT is based on a large language model (LLM), which is a type of machine learning model.

A language model is a type of artificial intelligence model that is trained to understand and generate human language. LLMs are a category of language models with a much higher number of parameters than traditional models. They are trained on vast datasets to predict the next token in a sequence, taking the context into account. After training, an LLM can be fine-tuned to excel at various natural language processing (NLP) tasks. An LLM uses deep neural networks to produce outputs based on patterns learned from its training data. It can perform a variety of NLP tasks, such as generating and classifying text, answering questions conversationally, and translating text from one language to another.

Examples of LLMs:

- GPT-3.5 / GPT-4 (Generative Pre-trained Transformer) – from OpenAI.

- BERT (Bidirectional Encoder Representations from Transformers) – from Google.

- RoBERTa (Robustly Optimized BERT Approach) – from Facebook AI.

- Megatron-Turing NLG – from NVIDIA and Microsoft.

My choice for this case was OpenAI.

The OpenAI API is highly versatile and applicable to a wide array of tasks involving understanding or creating natural language and code. Moreover, the API facilitates image generation and editing, as well as speech-to-text conversion. It provides a diverse range of models, each with distinct capabilities and pricing options.

OpenAI offers several families of models:

- GPT-3.5 models can understand and generate both natural language and code. Among the GPT-3.5 variants, gpt-3.5-turbo is the most capable and cost-effective model. It is optimized for chat through the Chat Completions API, but it also performs well on traditional completion tasks.

- GPT-4 currently accepts text inputs and produces text outputs. It handles complex problems well and is more accurate than OpenAI’s previous models. Like gpt-3.5-turbo, GPT-4 is optimized for chat and also performs well on traditional completion tasks through the Chat Completions API.

- GPT base models can understand and generate both natural language and code, but they have not been trained to follow instructions. They are intended as replacements for OpenAI’s original GPT-3 base models and use the legacy Completions API.

- DALL·E is an AI system capable of generating realistic images and artwork based on a natural language description.

- Embeddings are numerical representations of text that make it possible to measure how related two pieces of text are. OpenAI’s latest embedding model, text-embedding-ada-002, is a second-generation model intended to replace the 16 first-generation embedding models.

- Moderation models are designed to check whether content complies with OpenAI’s usage policies. They classify content into specific categories, including hate, threatening language, self-harm, sexual content, content involving minors, violence, and graphic violence.

You can interact with the API through HTTP requests from any programming language, or through OpenAI’s official Python bindings or Node.js library. The OpenAI API uses API keys for authentication. You can get your API key from the API Keys page and then use it in your requests. Remember that your API key is a secret!
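Because the API is plain HTTP, the authentication step can be sketched with the Python standard library alone. This is a minimal sketch, assuming the key is stored in an `OPENAI_API_KEY` environment variable; the helper name `build_chat_request` is hypothetical. It only builds the request, since sending it requires a valid key and network access.

```python
import json
import os
import urllib.request
from typing import Optional

# Chat Completions endpoint of the OpenAI REST API.
CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(payload: dict, api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an authenticated POST request."""
    # Assumption: the key lives in the OPENAI_API_KEY environment variable.
    key = api_key or os.environ["OPENAI_API_KEY"]
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The API key is sent as a bearer token; never hard-code a real key.
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    {"model": "gpt-3.5-turbo", "messages": [{"role": "user", "content": "Hi"}]},
    api_key="sk-test-placeholder",  # placeholder, not a real key
)
# urllib.request.urlopen(request) would actually send it.
```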

I chose the GPT-3.5 model “gpt-3.5-turbo” to build the chatbot. After comparing various models, I found that “gpt-3.5-turbo” offered the best balance between quality of results and cost.

And here we are at the first step.

First Step

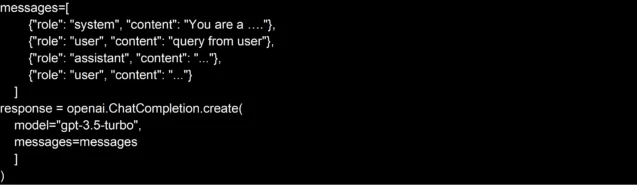

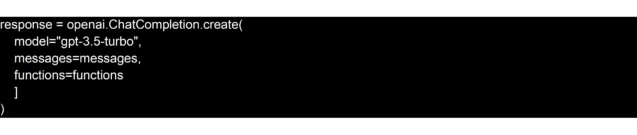

Let’s see what options we have. To use a model, you send a request with a list of messages and your API key, and you get back a response containing the model’s answer. Since I’m a Python developer, it will come as no surprise that I chose OpenAI’s official Python library to work with the API. The library exposes the Chat Completions API, and a call looks like the following example:

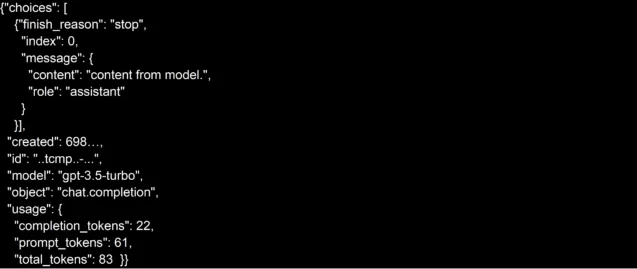
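The call in the screenshot boils down to a model name and a list of role-tagged messages. The sketch below builds that payload as plain data; the system prompt text and the `build_messages` helper are hypothetical. With the pre-1.0 `openai` package the payload would be sent as `openai.ChatCompletion.create(**payload)`, and with version 1.x as `client.chat.completions.create(**payload)`.

```python
from typing import Dict, List

# Hypothetical system prompt that sets the chatbot's shared context.
SYSTEM_CONTEXT = (
    "You are a support assistant. You help users request new virtual "
    "machines and VPN permissions."
)


def build_messages(user_query: str, context: str = SYSTEM_CONTEXT) -> List[Dict[str, str]]:
    """Return the message list: shared context first, then the user's query."""
    return [
        {"role": "system", "content": context},
        {"role": "user", "content": user_query},
    ]


payload = {
    "model": "gpt-3.5-turbo",
    "messages": build_messages("I need a Linux VM with 8 GB of RAM"),
}
```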

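The GPT model returns a response with a fixed structure: a list of ‘choices’, each containing the assistant’s ‘message’ and a ‘finish_reason’, plus token ‘usage’ statistics.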
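A sketch of that structure as a Python dict, with a helper that pulls out the reply text. The values (id, timestamp, text, token counts) are made up for illustration; only the field layout follows the API.

```python
# Illustrative response in the shape returned by the Chat Completions API.
sample_response = {
    "id": "chatcmpl-example",
    "object": "chat.completion",
    "created": 1690000000,
    "model": "gpt-3.5-turbo-0613",
    "choices": [
        {
            "index": 0,
            "message": {
                "role": "assistant",
                "content": "Sure. Which operating system should the VM run?",
            },
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 42, "completion_tokens": 11, "total_tokens": 53},
}


def extract_reply(response: dict) -> str:
    """Return the assistant's text from the first choice."""
    return response["choices"][0]["message"]["content"]


print(extract_reply(sample_response))
```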

A free-text answer, however, is hard to turn reliably into parameters for the support team. To solve this problem, I used a feature called Function calling.

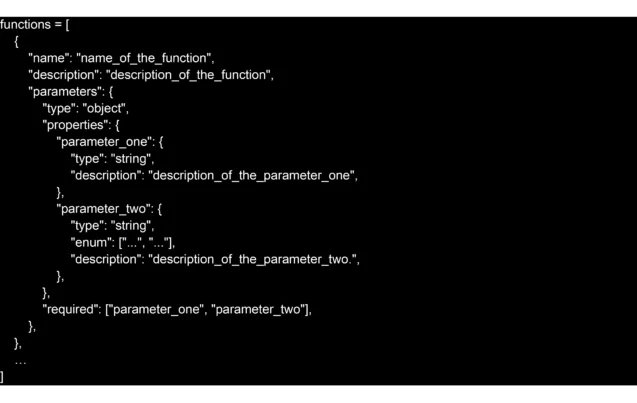

To use Function calling, you create a list of function schemas, each describing a function’s name, purpose, and parameters.
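A sketch of such a list for this chatbot’s two request groups. Each schema has a `name`, a `description` the model reads when deciding which function fits, and `parameters` written as a JSON Schema object. The function names and fields here are hypothetical, not the author’s actual schemas.

```python
# Hypothetical function schemas for the VM and VPN request groups.
FUNCTIONS = [
    {
        "name": "create_vm_request",
        "description": "Collect the parameters needed to create a virtual machine.",
        "parameters": {
            "type": "object",
            "properties": {
                "os_name": {
                    "type": "string",
                    "enum": ["linux", "windows"],
                    "description": "Operating system of the VM",
                },
                "cpu_cores": {"type": "integer", "description": "Number of vCPUs"},
                "ram_gb": {"type": "integer", "description": "Memory in GB"},
            },
            "required": ["os_name", "cpu_cores", "ram_gb"],
        },
    },
    {
        "name": "grant_vpn_access",
        "description": "Collect the parameters needed to grant a user VPN access.",
        "parameters": {
            "type": "object",
            "properties": {
                "username": {"type": "string", "description": "Account that needs access"},
                "network": {"type": "string", "description": "Network or VPN profile"},
            },
            "required": ["username", "network"],
        },
    },
]
```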

After creating the list of function schemas, I passed it to the Chat Completions API call.
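In the request, the schemas go into the `functions` parameter next to `messages`. A minimal sketch of the resulting payload, with one hypothetical schema so it stays self-contained. Setting `function_call` to `"auto"` lets the model decide whether to call a function; passing `{"name": ...}` instead would force a specific one.

```python
import json

# A single hypothetical schema, repeated here so the sketch is self-contained.
functions = [
    {
        "name": "create_vm_request",
        "description": "Collect the parameters needed to create a virtual machine.",
        "parameters": {
            "type": "object",
            "properties": {"os_name": {"type": "string"}, "ram_gb": {"type": "integer"}},
            "required": ["os_name", "ram_gb"],
        },
    }
]

payload = {
    "model": "gpt-3.5-turbo",
    "messages": [
        {"role": "system", "content": "You are a support assistant."},
        {"role": "user", "content": "Please create an Ubuntu VM with 16 GB of RAM."},
    ],
    # The function schemas travel alongside the messages.
    "functions": functions,
    # "auto" lets the model decide whether to call a function at all.
    "function_call": "auto",
}

# With the pre-1.0 openai package: openai.ChatCompletion.create(**payload)
print(json.dumps(payload, indent=2))
```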

I created a separate function for each request group, such as one for VM creation, with content tailored to that group’s needs.

By the end of the first step, the chatbot took the user’s request and called the model with the prepared context, the user query, and a list of function specifications. The model chooses a function and generates a JSON object containing the arguments needed to invoke it.

The official OpenAI documentation illustrates function specifications with a sample ‘get_current_weather’ function.
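Written as a Python dict, that example looks roughly like this. It is reproduced from memory of OpenAI’s function-calling guide, so treat the exact wording as approximate; the small check at the end simply shows which parameters the model must always supply.

```python
# Function specification in the style of the get_current_weather example
# from OpenAI's function-calling guide.
weather_function = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "The city and state, e.g. San Francisco, CA",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}

# Required parameters must always be filled in; the rest are optional.
params = weather_function["parameters"]
required = sorted(params["required"])
optional = sorted(set(params["properties"]) - set(required))
print("required:", required, "optional:", optional)
# prints: required: ['location'] optional: ['unit']
```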

Using the prepared context, the model selects a function and returns its arguments as a JSON string.
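When the model picks a function, the assistant message carries a `function_call` field instead of text, and the choice’s `finish_reason` is `"function_call"`. Note that `arguments` arrives as a JSON string, not a dict. A minimal parsing sketch; the message contents and the function name are illustrative.

```python
import json
from typing import Optional, Tuple

# Illustrative assistant message when the model decides to call a function.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "create_vm_request",  # hypothetical function name
        "arguments": '{"os_name": "linux", "ram_gb": 16}',
    },
}


def parse_function_call(message: dict) -> Optional[Tuple[str, dict]]:
    """Return (function name, arguments dict), or None for a plain text reply."""
    call = message.get("function_call")
    if call is None:
        return None
    # json.loads raises json.JSONDecodeError if the model produced malformed JSON.
    arguments = json.loads(call["arguments"])
    return call["name"], arguments


print(parse_function_call(sample_message))
```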

The main algorithm for working with a model looks like this:

- Invoke the model by passing the user’s query and a predefined set of functions through the ‘functions’ parameter.

- The model may choose to call a function; if it does, the response contains a JSON object, serialized as a string, that follows your custom schema.

- In your code, parse the string into JSON and, if the arguments are present and valid, call your function with them.
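The three steps above can be sketched end to end. To keep it runnable offline, the model call is injected as a `call_model` parameter and replaced by a canned `fake_model`; the `create_vm_request` handler and the `HANDLERS` table are hypothetical. In a real chatbot, `call_model` would wrap the OpenAI library call.

```python
import json
from typing import Callable, Dict, List


def create_vm_request(os_name: str, ram_gb: int) -> str:
    """Hypothetical handler that prepares a VM request."""
    return f"VM request prepared: {os_name}, {ram_gb} GB RAM"


# Hypothetical table of local functions the model is allowed to pick.
HANDLERS: Dict[str, Callable[..., str]] = {"create_vm_request": create_vm_request}


def handle_query(query: str, functions: List[dict],
                 call_model: Callable[[dict], dict]) -> str:
    # 1. Invoke the model with the user's query and the function schemas.
    response = call_model({
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": query}],
        "functions": functions,
    })
    message = response["choices"][0]["message"]

    # 2. The model may answer in plain text instead of choosing a function.
    call = message.get("function_call")
    if call is None:
        return message["content"]

    # 3. Parse the JSON string and call the matching local function.
    if call["name"] not in HANDLERS:
        raise ValueError(f"model chose an unknown function: {call['name']}")
    arguments = json.loads(call["arguments"])
    return HANDLERS[call["name"]](**arguments)


def fake_model(payload: dict) -> dict:
    """Canned stand-in for the API so the sketch runs offline."""
    return {"choices": [{"message": {
        "role": "assistant",
        "content": None,
        "function_call": {"name": "create_vm_request",
                          "arguments": '{"os_name": "linux", "ram_gb": 16}'},
    }}]}


print(handle_query("I need a Linux VM with 16 GB of RAM", [], fake_model))
# prints: VM request prepared: linux, 16 GB RAM
```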

To manage the functions, I developed a main module that holds the context shared by the whole chatbot.

The main module was also designed to load a different set of functions for each group of requests.
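One way to organize such a main module is a registry that maps each request group to its function schemas, with the shared context added to every request. This is a sketch under that assumption; the group keys, schemas, and helper names are hypothetical.

```python
from typing import Dict, List

# Hypothetical shared context held by the main module.
COMMON_CONTEXT = "You are the company's IT support assistant. Be concise."

# Hypothetical registry: each request group contributes its own schemas.
GROUP_FUNCTIONS: Dict[str, List[dict]] = {
    "vm": [{"name": "create_vm_request", "description": "Create a VM",
            "parameters": {"type": "object", "properties": {}}}],
    "vpn": [{"name": "grant_vpn_access", "description": "Grant VPN access",
             "parameters": {"type": "object", "properties": {}}}],
}


def functions_for(groups: List[str]) -> List[dict]:
    """Collect the schemas of the requested groups, in order."""
    return [schema for group in groups for schema in GROUP_FUNCTIONS[group]]


def build_request(query: str, groups: List[str]) -> dict:
    """Combine the shared context, the user query, and the group's functions."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": [
            {"role": "system", "content": COMMON_CONTEXT},
            {"role": "user", "content": query},
        ],
        "functions": functions_for(groups),
    }
```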

Second Step

The second step is the preparation of parameters for the support team.

Each group function has its own content for extracting the parameters from a user query. These functions can ask the user follow-up questions and can even give the GPT model an additional function to help it prepare the parameters correctly.
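Asking additional questions can be as simple as checking which required parameters the model left empty and asking for the first missing one. A minimal sketch, assuming the hypothetical parameter names and question texts below.

```python
from typing import Dict, List, Optional

# Hypothetical follow-up questions for required VM parameters.
FOLLOW_UP_QUESTIONS: Dict[str, str] = {
    "os_name": "Which operating system should the VM run?",
    "cpu_cores": "How many CPU cores do you need?",
    "ram_gb": "How much RAM (in GB) do you need?",
}


def next_question(arguments: dict, required: List[str]) -> Optional[str]:
    """Return a question for the first missing required parameter, or None."""
    for name in required:
        if arguments.get(name) in (None, ""):
            return FOLLOW_UP_QUESTIONS.get(name, f"Please provide {name}.")
    return None


required = ["os_name", "cpu_cores", "ram_gb"]
print(next_question({"os_name": "linux", "ram_gb": 8}, required))
# prints: How many CPU cores do you need?
```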

To confirm the parameters, I use a general function that asks the user for confirmation. Because the model’s responses are probabilistic, this confirmation step relies on classical, deterministic techniques rather than on the model.
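A sketch of what confirmation with classical techniques might look like: a fixed summary of the parameters and plain keyword matching on the answer, so the decision never depends on the model. The keyword sets and function names are assumptions.

```python
from typing import Optional

# Deterministic keyword sets: no model call is involved in confirmation.
YES = {"yes", "y", "ok", "confirm", "correct"}
NO = {"no", "n", "cancel", "wrong"}


def format_summary(params: dict) -> str:
    """Render the collected parameters as a fixed confirmation prompt."""
    lines = [f"- {key}: {value}" for key, value in params.items()]
    return "Please confirm these parameters (yes/no):\n" + "\n".join(lines)


def parse_confirmation(answer: str) -> Optional[bool]:
    """True for yes, False for no, None if the answer is unclear."""
    normalized = answer.strip().lower().rstrip(".!")
    if normalized in YES:
        return True
    if normalized in NO:
        return False
    return None
```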

After the model responded, I had JSON arguments with parameters that needed to be parsed and validated. For this, I chose “Pydantic”, which is well suited to the job.
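The article uses Pydantic for this. To keep the sketch dependency-free, it swaps in a standard-library dataclass with explicit checks, which plays the same role; the `VMRequest` fields and allowed values are hypothetical. With Pydantic, `VMRequest` would subclass `BaseModel`, and the JSON string could be validated directly with `VMRequest.model_validate_json(raw)` (Pydantic v2) or `VMRequest.parse_raw(raw)` (v1).

```python
import json
from dataclasses import dataclass

ALLOWED_OS = {"linux", "windows"}


@dataclass
class VMRequest:
    """Hypothetical parameter model for a VM request."""
    os_name: str
    cpu_cores: int
    ram_gb: int

    def __post_init__(self) -> None:
        # Checks that Pydantic would express with field types and validators.
        if self.os_name not in ALLOWED_OS:
            raise ValueError(f"unsupported OS: {self.os_name!r}")
        for field_name in ("cpu_cores", "ram_gb"):
            value = getattr(self, field_name)
            if not isinstance(value, int) or isinstance(value, bool) or value <= 0:
                raise ValueError(f"{field_name} must be a positive integer")


def parse_vm_request(raw_arguments: str) -> VMRequest:
    """Parse the model's JSON argument string and validate it."""
    data = json.loads(raw_arguments)
    return VMRequest(**data)


def is_valid(raw_arguments: str) -> bool:
    try:
        parse_vm_request(raw_arguments)
    except (ValueError, TypeError):
        # json.JSONDecodeError subclasses ValueError; TypeError covers
        # missing or unexpected keys passed to the dataclass constructor.
        return False
    return True
```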

Third Step

Finally, the chatbot informs the support team about the issue.

To do this, I again used Function calling.

There are several ways to reach the support team, so I created a separate function for each one and let the user choose how the support team should be informed. At this step, all the issue parameters are already known, so the GPT model only has to choose the notification method.
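Once the model has chosen a notification method, the local code only needs to turn the parameters into a message. A sketch with two hypothetical methods, `send_email` and `create_task`: the email is composed with the standard library’s `email.message.EmailMessage` (sending it would use `smtplib`), while the task payload format is made up, since it depends on the task management tool.

```python
from email.message import EmailMessage

SUPPORT_ADDRESS = "support@example.com"  # hypothetical address


def build_support_email(params: dict, sender: str) -> EmailMessage:
    """Compose (but do not send) an email describing the request."""
    msg = EmailMessage()
    msg["Subject"] = f"Support request: {params.get('request_type', 'unknown')}"
    msg["From"] = sender
    msg["To"] = SUPPORT_ADDRESS
    msg.set_content("\n".join(f"{key}: {value}" for key, value in params.items()))
    return msg


def build_task(params: dict) -> dict:
    """Hypothetical task payload for a task management tool."""
    return {"title": f"Support request: {params.get('request_type', 'unknown')}",
            "fields": dict(params)}


# Maps the notification method chosen by the model to a local builder.
NOTIFIERS = {
    "send_email": lambda params: build_support_email(params, sender="chatbot@example.com"),
    "create_task": build_task,
}
```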

Conclusion

The main idea behind the chatbot was to accept user queries in natural language and build the message content for the model from them. The model gave good results both in choosing functions and in preparing data for the support team. Still, the content must be prepared carefully, and prompts should be written following the OpenAI team’s recommendations. With that in place, creating the chatbot comes down to three simple steps.

Elinext: Your go-to Python development partner.

We specialize in crafting tailored software solutions for businesses, leveraging the versatility of Python. Our expert team delivers robust, scalable applications that drive innovation and business success. From web and mobile apps to complex enterprise systems, Elinext is committed to excellence, ensuring timely and budget-friendly solutions for startups to large enterprises.