This article is written by Elinext’s software developer Ivan Polyakov. It covers AWS Kinesis Streams which, described in a few words, is a scalable real-time streaming service for collecting and processing extremely large amounts of data. It is simple, yet promising for many tasks that involve transmitting and processing large volumes of data.

This is a review of the service, in which we highlight the key points of using it in projects that have to handle high data loads.

The service landing page gives a general impression of what it is all about, so let’s take a closer look.

Kinesis is actually split into four related services:

Video Streams makes it easy to securely stream video from connected devices to AWS for analytics, machine learning (ML), and other processing.

Data Streams is a scalable and durable real-time data streaming service that can continuously capture gigabytes of data per second from hundreds of thousands of sources.

Data Firehose is the easiest way to capture, transform, and load data streams into AWS data stores for near real-time analytics with existing business intelligence tools.

Data Analytics is the easiest way to process data streams in real time with SQL or Java without having to learn new programming languages or processing frameworks.

In this article, we’ll focus on the Data Streams (DS) and Data Firehose (DF) services. They cover everything needed for the cases reviewed below.

Common situation

If the spec for a project does not require the architecture to handle high load, this aspect often gets overlooked. This is especially true when the budget and time frame for development are limited.

In most cases, incoming data goes directly to the app, which processes it, performs certain actions and stores the data in the database. Everything works fine until “sudden popularity” hits the project and the amount of collected data becomes too large to process.

Then the app starts working far too slowly and consumes a lot of memory. Parts of the incoming data usually get lost.

The first countermeasures are often a hardware upgrade and some code refactoring. That helps for a while, usually for a day or two 🙂 Then incoming data grows 100x and turns into millions of requests a day and gigabytes of data.

What could help now is upgrading to an architecture that can handle high load. We will consider the Kinesis Data Streams service as one possible way to achieve this, and give the pros and cons of using it below.

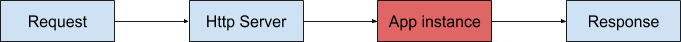

Architecture without Kinesis Streams

First of all, we should mention that only a few common approaches to improving individual processes will be reviewed here. Let’s begin by analyzing the main issue in detail.

The HTTP server creates a process in the operating system (e.g. Ubuntu) to deal with requests. The process consumes CPU time and memory. When another request arrives at the same time, it has to be processed as well. The more CPU and memory an App instance needs, the fewer requests the server can handle.

Often a single instance can consume all the CPU time and all the memory. When we are talking about millions of requests, upgrading the hardware or moving to the cloud is often too expensive an option.

Let’s have a look at what happens inside an App instance. Processing a request usually takes many steps. Most of them are fast and do not require optimization. A few, however, depend on certain conditions.

Those conditions are the incoming data or the data from the database that needs to be processed. If these steps run slowly, they block the other steps from running at normal speed. So we have to do something about the problematic steps that slow everything down.

Reading and writing from and to the database

Problems may occur when reading from the database and writing to it. With hundreds of gigabytes of data, these steps can be a headache. The queries have to be optimized.

If not, well, just FYI: one non-optimized second of waiting per request adds up to more than 11 days of waiting over a million requests.
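The arithmetic behind that figure can be checked with a quick sketch. It assumes the requests are handled one after another, so the delays simply add up; `secondsToDays` is a helper name made up for this example.

```javascript
// Assumption: requests are processed sequentially, so per-request delays add up.
const SECONDS_PER_DAY = 24 * 60 * 60; // 86,400 seconds

// Hypothetical helper: converts a total delay in seconds into days.
function secondsToDays(totalSeconds) {
  return totalSeconds / SECONDS_PER_DAY;
}

const requests = 1000000;
const extraSecondsPerRequest = 1;
const days = secondsToDays(requests * extraSecondsPerRequest);

console.log(days.toFixed(1)); // 11.6
```

With parallel workers the wall-clock time shrinks, but the total amount of wasted processing time stays the same.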

Separating read and write operations with database replication is a good solution to this problem. If the data being read does not have to be perfectly fresh, a read replica will increase performance dramatically.
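As a rough illustration of the idea, the sketch below sends `SELECT` statements to a replica and everything else to the primary. The `createRouter` function and the `primary`/`replica` objects are hypothetical stand-ins, not part of any particular database driver; each only needs a `query(sql, params)` method.

```javascript
// Hypothetical router: reads go to a replica, writes go to the primary.
// `primary` and `replica` stand in for real database connections.
function createRouter(primary, replica) {
  const isRead = (sql) => /^\s*select\b/i.test(sql);
  return {
    query(sql, params = []) {
      const target = isRead(sql) ? replica : primary;
      return target.query(sql, params);
    },
  };
}

// In-memory stand-ins so the sketch runs without a database.
const fakeConnection = (name) => ({
  query: (sql) => `${name}: ${sql}`,
});

const db = createRouter(fakeConnection('primary'), fakeConnection('replica'));
console.log(db.query('SELECT * FROM stats'));          // replica: SELECT * FROM stats
console.log(db.query('INSERT INTO stats VALUES (1)')); // primary: INSERT INTO stats VALUES (1)
```

Reads that must see a write made a moment ago would still have to go to the primary, since a replica can lag behind.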

After that, it could be useful to cache the results of complex queries and calculations. The latter can simply be stored in memory. Caching the results of complex queries can be harder.

For those, many databases offer a mechanism such as views, which can hold pre-selected rows and thus simplify selection.
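Storing calculation results in memory could look roughly like the sketch below. It is a minimal cache with a time-to-live, written for illustration only; `createCache` and `getOrCompute` are names invented here, and a production setup would more likely rely on a dedicated cache store.

```javascript
// Minimal in-memory cache with a time-to-live (TTL), for illustration only.
// `now` is injectable so expiry can be exercised without waiting.
function createCache(ttlMs, now = Date.now) {
  const entries = new Map(); // key -> { value, expiresAt }
  return {
    // Returns the cached value, or computes and stores it on a miss.
    getOrCompute(key, compute) {
      const hit = entries.get(key);
      if (hit && hit.expiresAt > now()) return hit.value;
      const value = compute();
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
  };
}

let calls = 0;
const slowTotal = () => { calls += 1; return 42; }; // stands in for a slow calculation
const cache = createCache(60000);
cache.getOrCompute('daily-total', slowTotal);
cache.getOrCompute('daily-total', slowTotal);
console.log(calls); // 1, the second call was served from memory
```

The TTL is the trade-off knob: a longer value saves more work but serves staler results.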

Moving data to a specific database

Specific databases are designed for doing specific things. Thus, selecting the right database for storing and working with a part of the app data could significantly improve performance.

For instance, Elasticsearch can be considered for searching documents, ClickHouse is great for keeping statistics data, and Redis works perfectly for storing pre-selected cached results.

We could go on with specific databases (as there are plenty), but this is a topic for another article.

Splitting App execution steps into microservices

This is by no means an easy process for existing apps. However, it is worth a try, as microservices handle high data loads very well. Slow steps are either moved from the main execution chain to the background or scaled with additional resources. We’ll review this approach in detail in the context of Kinesis Data Streams.

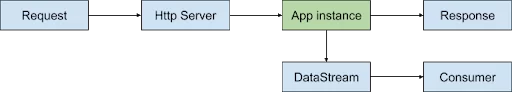

Architecture using Data Streams

In this architecture, all data collection is handled by a Data Stream. We do not have to split all the steps into microservices; moving only the data processing step is enough.

For this, we need to implement a Provider and a Consumer for our Data Stream, to put data in and read it out respectively.

Now, the steps will look like this:

In this diagram, the Provider is the App instance, which uses the AWS SDK internally to send the data.

Once the data is sent, the response can be returned. There is no need to wait for the data processing step to finish. Thus, we have solved the main problem by moving the slow step out of the main chain of steps.
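The flow described here can be sketched as a request handler that hands the payload to the stream and responds right away. Everything in this example is hypothetical: `handleRequest`, the injected `putRecord` function and the `userId` field are invented for illustration, and in a real app `putRecord` would wrap an AWS SDK call.

```javascript
// Hypothetical request handler: hands the payload to a stream and responds
// right away instead of running the slow processing step inline.
// `putRecord` is injected; in a real app it would wrap an AWS SDK call.
async function handleRequest(payload, putRecord) {
  await putRecord({
    Data: JSON.stringify(payload),
    PartitionKey: String(payload.userId), // assumed field, used to spread records over shards
  });
  return { status: 202, body: 'accepted' }; // processing happens later, elsewhere
}

// In-memory stand-in for the stream so the sketch runs locally.
const sent = [];
const fakePutRecord = async (record) => { sent.push(record); };

handleRequest({ userId: 7, event: 'page_view' }, fakePutRecord)
  .then((response) => console.log(response.status, sent.length)); // 202 1
```

The handler still waits for the stream to accept the record, which is normally much cheaper than waiting for the processing itself.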

But what about DS? How does it handle large amounts of data? To control the throughput, DS uses so-called shards. The official Amazon documentation explains it as follows: “A shard is the base throughput unit of an Amazon Kinesis data stream. One shard can ingest up to 1000 data records per second, or 1MB/sec. To increase your ingestion capability, add more shards.”

So we can control the throughput by adding or removing shards depending on the level of data load.

And now, it is up to Amazon to provide the required capacity for this step.
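Based on the per-shard limits quoted above, a rough shard estimate could be calculated as in the sketch below. `shardsNeeded` is a helper invented for this example, and it only considers ingestion, not how fast consumers read.

```javascript
// Per-shard ingest limits as quoted from the documentation above.
const MAX_RECORDS_PER_SECOND = 1000;
const MAX_MB_PER_SECOND = 1;

// Hypothetical helper: smallest shard count that satisfies both limits.
function shardsNeeded(recordsPerSecond, mbPerSecond) {
  const byRecords = Math.ceil(recordsPerSecond / MAX_RECORDS_PER_SECOND);
  const byBytes = Math.ceil(mbPerSecond / MAX_MB_PER_SECOND);
  return Math.max(1, byRecords, byBytes);
}

console.log(shardsNeeded(2500, 1.5)); // 3, the record rate is the bottleneck
console.log(shardsNeeded(500, 4));    // 4, the byte rate is the bottleneck
```

Whichever limit is hit first decides the count, so many tiny records and a few large ones can need the same number of shards.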

So the data is in a DS, but it still needs to be processed. For this step, we need to create a Consumer.

It can be a piece of code that constantly fetches the data stored in DS and processes it. A more interesting solution is to add a Lambda function that does all the processing. Once data arrives, the function is triggered. Thus, both storing and processing are moved to Amazon services with auto-scaling.
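A Lambda function triggered by a Data Stream receives the records in batches, with each payload base64-encoded under `record.kinesis.data`. The sketch below shows one possible shape of such a consumer; `decodeRecords` is a helper name invented here, and the processing step is only a placeholder.

```javascript
// Decodes the records of a Kinesis Data Streams event delivered to Lambda.
// Each record carries its payload base64-encoded in record.kinesis.data.
function decodeRecords(event) {
  return event.Records.map((record) => ({
    partitionKey: record.kinesis.partitionKey,
    payload: Buffer.from(record.kinesis.data, 'base64').toString('utf8'),
  }));
}

// Lambda entry point: invoked with a batch whenever new data arrives.
const handler = async (event) => {
  for (const { partitionKey, payload } of decodeRecords(event)) {
    // Placeholder for the real processing step (parse, aggregate, store...).
    console.log(`key=${partitionKey} payload=${payload}`);
  }
};

// Export for Lambda; guarded so the sketch also runs as a plain script.
if (typeof module !== 'undefined') module.exports = { handler };

// Local smoke test with a hand-made event of the same shape.
const sampleEvent = {
  Records: [{
    kinesis: {
      partitionKey: 'user-7',
      data: Buffer.from('hello').toString('base64'),
    },
  }],
};
console.log(decodeRecords(sampleEvent));
```

Keeping the decoding in a separate function makes it easy to test locally without deploying anything.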

What kind of data can use this approach?

Pretty much any data that doesn’t need to be returned to the user as a response.

Here is a nice example of where it could be useful. The app collects large amounts of statistical data that needs to be analyzed, compacted and stored in many tables in the database. Such operations can be time-consuming, and it is better to do them in the background. The DS service makes that possible.

Architecture using Data Firehose

It is pretty much the same. The only difference is that, instead of a consumer, we use one of the supported data stores (S3, Redshift, Elasticsearch, and Splunk).

So, if you need to process and store images or logs on S3, you send them to a DF and it will be done in the background. The App does not have to wait for this operation to complete.

You might wonder how it can do any processing if DF just sends the data to one of the storages.

As in the case of DS, a Lambda function can be added to pre-process the data before it is stored.

Any sample?

First of all, you need to create a Data Stream in your AWS account. It is fairly simple: open the Kinesis service in the AWS console, click the “create” button, and Amazon will guide you through the process.

Also, you can check the official guide to read about the available options. Once done, you can use the code below to play with it.

Provider (PHP):

    <?php
    // Requires the AWS SDK for PHP v3, installed through Composer.
    require 'vendor/autoload.php';

    use Aws\Credentials\CredentialProvider;
    use Aws\Kinesis\KinesisClient;
    use Aws\Firehose\FirehoseClient;

    // Load credentials from an INI file and cache them between calls.
    $profile = 'default';
    $path = 'aws/credentials';
    $provider = CredentialProvider::ini($profile, $path);
    $provider = CredentialProvider::memoize($provider);

    // Kinesis DataStream case.
    $client = new KinesisClient([
        'version' => 'latest',
        'region' => 'us-east-2',
        'credentials' => $provider,
    ]);

    $result = $client->putRecords([
        'StreamName' => 'MyFirstKStream', // name of a stream you have created.
        'Records' => [
            [
                'Data' => uniqid(), // data to send.
                // Any string works here: Kinesis hashes the key to choose the shard.
                'PartitionKey' => 'shardId-000000000000',
            ],
        ],
    ]);

    // Kinesis Firehose case.
    $client = new FirehoseClient([
        'version' => 'latest',
        'region' => 'us-east-2',
        'credentials' => $provider,
    ]);

    $result = $client->putRecord([
        'DeliveryStreamName' => 'MyFirstDStream', // name of a stream you have created.
        'Record' => [
            'Data' => uniqid(), // data to send.
        ],
    ]);

Consumer (Lambda, Node.js):

    console.log('Loading function');

    // Note: this handler reads event.records, the batch shape that Firehose
    // passes to a data-transformation Lambda. A Lambda triggered directly by a
    // Data Stream receives event.Records with the payload in record.kinesis.data.
    exports.handler = async (event, context) => {
        let success = 0;
        let failure = 0;

        const output = event.records.map((record) => {
            /* Data is base64 encoded, so decode here */
            // const recordData = Buffer.from(record.data, 'base64');
            console.log(record);

            try {
                /*
                 * Note: Write logic here to deliver the record data to the
                 * destination of your choice
                 */
                success++;
                return {
                    recordId: record.recordId,
                    data: record.data,
                    result: 'Ok',
                };
            } catch (err) {
                failure++;
                return {
                    recordId: record.recordId,
                    data: record.data,
                    // Firehose transformation accepts 'Ok', 'Dropped' or 'ProcessingFailed'.
                    result: 'ProcessingFailed',
                };
            }
        });

        console.log(`Successfully delivered records ${success}, Failed delivered records ${failure}.`);
        return { records: output };
    };

Conclusion

AWS Kinesis Streams make it possible to integrate data processing services into an app quickly and with minimal effort.

It lets you forget about hardware upgrades and stay focused on the App architecture and business processes. But this does not mean that you should put DS everywhere just to have it 🙂 Well, you can, but it will cost you. Currently, a Shard Hour (1MB/second ingress, 2MB/second egress) costs $0.015.

Also, keep in mind that running a microservices architecture on Amazon requires some experience with it. Thoughtless use of Amazon services can be very expensive.
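To get a feeling for the numbers, the shard-hour price quoted above can be turned into a monthly estimate. This is only a sketch: `shardCostUsd` is a made-up helper, it covers nothing but the shard-hour charge, and the price should be checked against current AWS pricing.

```javascript
// Shard-hour price as quoted in the article; verify against current AWS pricing.
const PRICE_PER_SHARD_HOUR_USD = 0.015;

// Hypothetical helper: shard-hour cost only, ignoring any other charges.
function shardCostUsd(shards, hours) {
  return shards * hours * PRICE_PER_SHARD_HOUR_USD;
}

const hoursIn30Days = 24 * 30;
console.log(shardCostUsd(1, hoursIn30Days).toFixed(2));  // 10.80
console.log(shardCostUsd(10, hoursIn30Days).toFixed(2)); // 108.00
```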